When we talk about Big O and scalability of code, we simply mean when we grow bigger and bigger with our input, how much does the algorithm or function slow down? In this post, we will understand more about Big O, time complexity, and why we need to be concerned about it when developing algorithms.

1. Computational Complexity

In computer science, computational complexity is a field which analyzes algorithms by calculated the amount resources required to execute it. The amount of resources varies based on the size of the input. So, we can understand the complexity is a function of n, where n is the size of the input.

The complexity analysis includes time complexity and space complexity. We will discuss space complexity in the next post while this post will mainly focus on time complexity and how to calculate it from different algorithms.

2. Time Complexity

In computer science, the time complexity is the computational complexity that describes the amount of time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform.

- Best-case: this is the complexity of solving the problem for the best input.

- Average-case: this is the average complexity of solving the problem. This complexity is defined with respect to the distribution of the values in the input data.

- Worst-case: this is the complexity of solving the problem for the worst input of size n.

For example: let's consider the catching Pikachu function that we discussed in the previous post:

const pokemon = ['Pikachu', 'Meowth', 'Bulbasaur'];

function catchPikachu (array) {

for (let i = 0; i < array.length; i++) {

if (array[i] === 'Pikachu') {

console.log('Catch Pikachu!'); return;

}

}

}

catchPikachu(pokemon);Consider the input, we have Pikachu is the first element of the pokemon array. The function just needs to iterate to the first element and finishes so it would be a best-case. However, if we are looking for Meowth instead of Pikachu, it would be an average-case. And finally, if we need to catch a Bulbasaur, it would be the worst-case.

3. Big O Notations and Time Complexities

If you have read my previous post, you may understand to some extend regarding Big O Notation. Below is the definition:

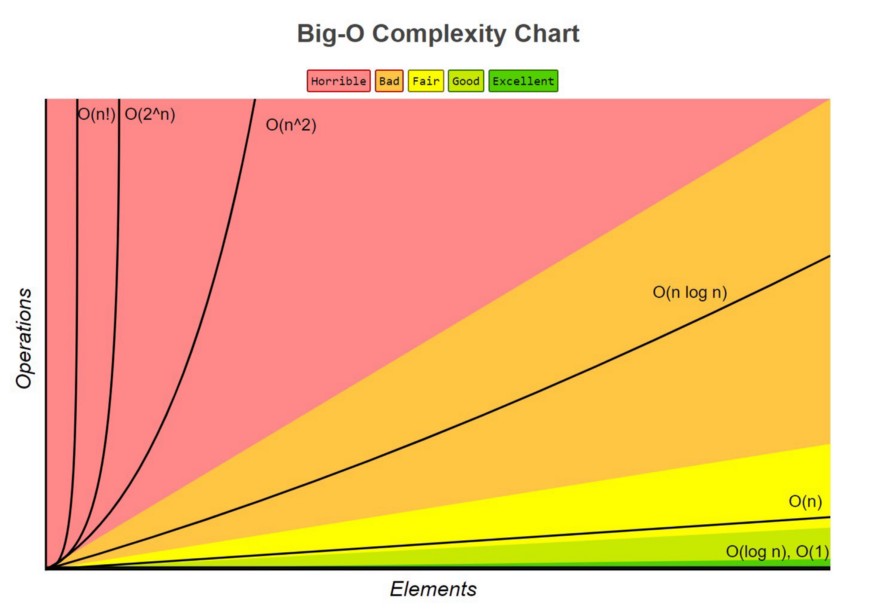

Big O notation, sometimes called “asymptotic notation”, is a mathematical notation that describes the limiting behavior of a function when the argument tends towards a particular value or infinity.

In computer science, Big-O notation is used to classify algorithms according to how their running time or space requirements grow as the input size (n) grows. This notation characterizes functions according to their growth rates: different functions with the same growth rate may be represented using the same O notation.

Let’s see some common time complexities described in the Big-O notation.

| Name | Time Complexity |

| Constant Time | O(1) |

| Logarithmic Time | O(logn) |

| Linear Time | O(n) |

| Quasilinear Time | O(nlogn) |

| Quadratic Time | O(n^2) |

| Exponential Time | O(2^n) |

| Factorial Time | O(n!) |

We have discussed more about Big O notation and Time Complexity when analyzing algorithms. We will focus more in details of each Time Complexity mentioned above in the next post. Thank you for reading my post, see you next time!